Improve Media Content Library Understanding Using Human-in-the-Loop AI to Improve User Engagement

Binge watching has become a way of life, not just for Gen Z but also for many baby boomers, and viewers are watching more content than ever. Over-the-top (OTT) and video-on-demand (VOD) platforms in particular offer a rich selection of content anytime, anywhere, and on any screen. As content volumes grow, media companies face challenges in preparing and managing their libraries, work that is crucial to delivering a high-quality viewing experience and monetizing content more effectively. Typical use cases include:

- Finding opening credits, intro start and end, recap start and end, and other video segments.

- Choosing the right spots to insert advertisements so that breaks fall at a logical pause for viewers.

- Creating automated, personalized trailers by extracting interesting themes from videos.

- Identifying audio and video synchronization issues.

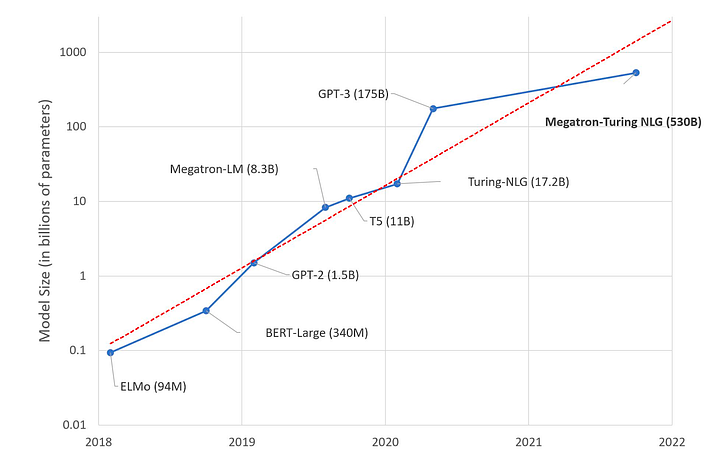

These tasks were traditionally handled by large teams of trained human workers, but many AI-based approaches have since emerged, such as the Amazon Rekognition video segmentation API. AI models are getting better at the use cases above, but they are typically pre-trained on different types of content and may not be accurate for your content library. So what if we used an AI-enabled human-in-the-loop approach to reduce cost and improve the accuracy of video segmentation tasks?
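One way to see how well a pre-trained model fits a particular library is to compare its segment boundaries with a small set of human-reviewed ones. The sketch below is only an illustration: the dictionary layout, the label names, and the function names `temporal_iou` and `boundary_errors` are assumptions made for this example, not part of any AWS or PropensityLabs API.

```python
def temporal_iou(a, b):
    """Intersection over union of two (start_ms, end_ms) intervals."""
    intersection = max(0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - intersection
    return intersection / union if union > 0 else 0.0


def boundary_errors(predicted, reviewed):
    """Compare model segments with human-reviewed segments, label by label.

    Both arguments map a label name to a (start_ms, end_ms) tuple.
    Offsets are predicted minus reviewed, in milliseconds.
    """
    report = {}
    for label, truth in reviewed.items():
        guess = predicted.get(label)
        if guess is None:
            report[label] = None  # the model did not detect this segment
            continue
        report[label] = {
            "start_offset_ms": guess[0] - truth[0],
            "end_offset_ms": guess[1] - truth[1],
            "iou": temporal_iou(guess, truth),
        }
    return report


# Hypothetical timings for one episode, in milliseconds.
predicted = {"intro": (61_000, 118_000), "end_credits": (2_520_000, 2_640_000)}
reviewed = {
    "intro": (60_000, 120_000),
    "end_credits": (2_500_000, 2_640_000),
    "recap": (0, 45_000),
}
for label, result in boundary_errors(predicted, reviewed).items():
    print(label, result)
```

Low overlap scores or missed segments on such a sample suggest that the model's labels need human correction before they are used downstream.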

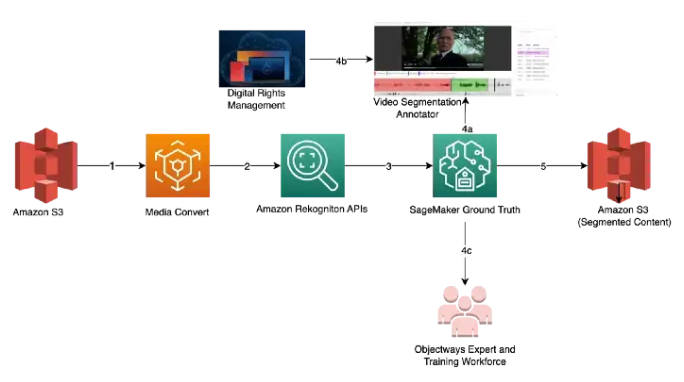

In our approach, AI-based APIs provide weak labels for the detected video segments, and trained human reviewers then refine them into precise segments. The approach improves your understanding of your media content and helps generate ground truth for fine-tuning AI models. The end-to-end workflow is as follows:

- Raw media content is uploaded to Amazon S3 cloud storage. The content may need to be preprocessed or transcoded to make it suitable for the streaming platform (e.g., converted to .mp4, upsampled, or downsampled).

- AWS Elemental MediaConvert transcodes file-based content for broadcast and streaming, for content libraries of any size. In this workflow, media files are transcoded to .mp4 format.

- Amazon Rekognition Video provides an API that identifies useful segments of video, such as black frames and end credits.

- PropensityLabs has developed a custom video segmentation annotation workflow on the SageMaker Ground Truth labeling service that can ingest labels from Amazon Rekognition. Optionally, you can skip the Amazon Rekognition step if you want to create your own labels, either for training a custom ML model or for applying directly to your content.

- The content may have privacy and digital rights management (DRM) requirements. The PropensityLabs video segmentation tool supports integration with DRM providers to ensure that only authorized analysts can view the content. Moreover, the content analysts operate out of SOC 2 Type 2 compliant facilities where downloads and screen captures are not allowed.

- The PropensityLabs media analyst team provides throughput and quality guarantees and delivers daily throughput according to your business needs. The segmented content labels are then saved to Amazon S3 in JSON manifest format and can be ingested directly into your media streaming platform.
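The Amazon Rekognition step of this workflow can be sketched in Python with boto3. This is a minimal illustration under stated assumptions, not the PropensityLabs implementation: the function names, the `weak-segments` key, and the label record layout are hypothetical, and only the `source-ref` key follows the SageMaker Ground Truth input manifest convention. The boto3 calls used are `start_segment_detection` and `get_segment_detection`.

```python
import json
import time


def detect_segments(bucket, key, poll_seconds=10):
    """Run Rekognition segment detection on a video in S3 and return all segments."""
    import boto3  # imported here so the helpers below also work without AWS access

    client = boto3.client("rekognition")
    job = client.start_segment_detection(
        Video={"S3Object": {"Bucket": bucket, "Name": key}},
        SegmentTypes=["TECHNICAL_CUE", "SHOT"],
    )
    segments, token = [], None
    while True:
        kwargs = {"JobId": job["JobId"]}
        if token:
            kwargs["NextToken"] = token
        page = client.get_segment_detection(**kwargs)
        if page["JobStatus"] == "IN_PROGRESS":
            time.sleep(poll_seconds)  # simple polling; a job notification also works
            continue
        if page["JobStatus"] != "SUCCEEDED":
            raise RuntimeError(page.get("StatusMessage", "segment detection failed"))
        segments.extend(page.get("Segments", []))
        token = page.get("NextToken")
        if not token:
            return segments


def to_weak_labels(segments):
    """Flatten Rekognition segments into simple records for human review."""
    labels = []
    for seg in segments:
        if seg["Type"] == "TECHNICAL_CUE":
            detail = seg["TechnicalCueSegment"]
            name = detail["Type"]  # e.g. "BlackFrames" or "EndCredits"
        else:
            detail = seg["ShotSegment"]
            name = f"Shot{detail['Index']}"
        labels.append({
            "label": name,
            "start_ms": seg["StartTimestampMillis"],
            "end_ms": seg["EndTimestampMillis"],
            "confidence": round(detail["Confidence"], 2),
            "source": "rekognition",
        })
    return sorted(labels, key=lambda item: item["start_ms"])


def manifest_line(video_uri, labels):
    """Build one JSON Lines manifest entry; "weak-segments" is a hypothetical key."""
    return json.dumps({"source-ref": video_uri, "weak-segments": labels})
```

A call such as `manifest_line("s3://my-bucket/episode.mp4", to_weak_labels(detect_segments("my-bucket", "episode.mp4")))` would then produce one manifest entry per video for reviewers to correct; the bucket and object names here are placeholders.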

Conclusion:

Artificial intelligence (AI) has become ubiquitous in media and entertainment as a way to improve content understanding, increase user engagement, and drive ad revenue. The AI-enabled human-in-the-loop approach outlined here reduces the cost of human review while maintaining high label quality. The approach can also be extended to other use cases such as content moderation, ad placement, and personalized trailer generation.
